Learning Contextualized User Preferences for Co-Adaptive Guidance in Mixed-Initiative Topic Model Refinement

Abstract

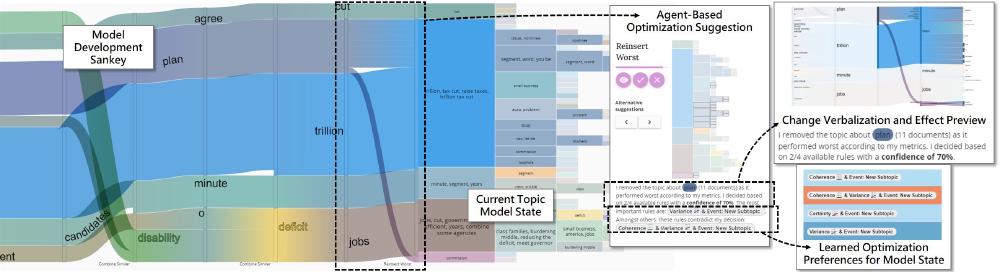

Mixed-initiative visual analytics systems support collaborative human-machine decision-making processes. However, many multi-objective optimization tasks, such as topic model refinement, are highly subjective and context-dependent. Hence, systems need to adapt their optimization suggestions throughout the interactive refinement process to provide efficient guidance. To tackle this challenge, we present a technique for learning context-dependent user preferences and demonstrate its applicability to topic model refinement. We deploy agents with distinct associated optimization strategies that compete for the user’s acceptance of their suggestions. To decide when to provide guidance, each agent maintains an intelligible, rule-based classifier over context vectorizations that captures the development of quality metrics between distinct analysis states. By observing implicit and explicit user feedback, agents learn in which contexts to provide their specific guidance operation. An agent in topic model refinement might, for example, learn to react to declining model coherence by suggesting to split a topic. Our results confirm that the rules learned by agents capture contextual user preferences. Further, we show that the learned rules are transferable between similar datasets, avoiding common cold-start problems and enabling a continuous refinement of agents across corpora.